The Complete A/B Testing Guide

What Is A/B Testing?

Are you tired of spending money on marketing campaigns without seeing the results you want? Do you feel like your website isn’t performing as well as it could be? It’s time to try A/B testing.

A/B testing is a powerful tool that lets you compare two versions of a webpage, email, or advertisement to determine which one performs better.

By analyzing user behavior and statistical significance, you can make data-driven decisions that will improve your conversion rates and revenue. In this complete A/B testing guide, we’ll walk you through everything from planning and executing tests to interpreting data and implementing winning variations.

Don’t miss out on this opportunity to optimize your website and grow your business. Let’s get started!

Benefits Of A/B Testing

A/B Testing is a powerful tool that can help businesses understand user behavior, optimize conversion rates, and ultimately increase revenue. By comparing two versions of a webpage or product to see which performs better with users, A/B testing provides actionable data to guide decision-making and drive business success.

Here’s What A/B Testing Means

A/B testing is a powerful method used by businesses to compare two versions of something, such as a web page or app, and determine which one performs better in terms of generating conversions and revenue.

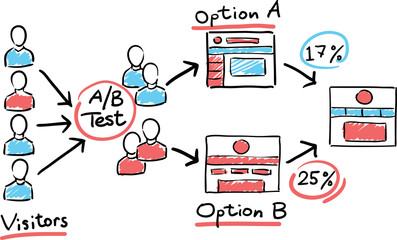

It involves dividing an audience into two equal groups and showing them different versions of the same website or product. The version that generates the most conversions is considered to be the winner, allowing businesses to optimize their marketing strategies for maximum output. There are also many service providers that can help with the A/B testing process.

A/B testing is sometimes called split testing because it splits traffic between two variations of the same page or element. The methodology relies on basic statistics, such as calculating sample sizes and measuring statistical significance.
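As a rough illustration of how traffic can be split, the sketch below assigns each visitor to a variant by hashing a user identifier. The function name `assign_variant`, the experiment name, and the user ID format are all hypothetical; commercial testing tools handle this step internally and may do it differently.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one of the variants.

    The same user always gets the same variant for a given experiment,
    and users are spread roughly evenly across the variants.
    """
    # Include the experiment name in the hash input so that a user's group
    # in one experiment does not determine their group in another.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Hypothetical user IDs, used only to show that the split is close to even.
    counts = Counter(
        assign_variant(f"user-{i}", "homepage-headline") for i in range(10_000)
    )
    print(counts)
```

Hashing instead of picking a random group on every visit means a returning visitor keeps seeing the same version, which keeps the two groups cleanly separated.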

Thanks to A/B testing, businesses can gain deep insights into how customers interact with their products, making informed decisions about what changes will generate the most significant results for their users.

Benefits Of A/B Testing For Businesses

A/B testing has numerous benefits for businesses looking to improve their website or app performance. By comparing two versions of a webpage, businesses can identify which design elements are more effective in engaging users and driving conversions.

This data-driven approach allows companies to make informed decisions that result in higher conversion rates, reduced bounce rates, and increased user engagement.

A/B testing also helps businesses save time and money by eliminating the need for guesswork when making design changes. Rather than relying on assumptions about what works best, A/B testing provides concrete evidence of what resonates with users.

Additionally, by continually optimizing their webpages through A/B testing, businesses can stay ahead of the competition and ensure continued growth and success.

Examples Of Successful A/B Tests

A/B testing has proven to be an effective way for businesses to improve their conversion rates and increase revenue. For instance, Airbnb successfully used A/B testing to determine which headline was more attractive to visitors by changing just a few words. This simple test resulted in a 2% increase in bookings that helped generate millions of dollars in additional revenue.

Another example is the case study of Basecamp, which found that changing the color scheme on their pricing page from blue to green led to a 14% increase in conversions. One proposed explanation is that green is associated with growth and success, which may have made it more appealing than blue.

These examples show how even small changes can have a significant impact on website performance when they are properly validated through A/B testing. They highlight the importance of building continuous optimization into your marketing strategy for consistently better results.

Planning For A/B Testing: Setting Clear Objectives And Goals

Before running an A/B test, it’s important to identify your target audience, choose the right elements to test, and set clear objectives and goals for what you want the test to achieve.

Identifying Target Audience

When it comes to A/B testing, identifying your target audience is crucial. This means narrowing down which group of people you want to test your variations on. This could be based on demographics such as age, location, or interests.

It’s important to understand not only who your target audience is but also their behavior and preferences. By analyzing user behavior data such as click-through rates and conversion rates, you can gain insight into what motivates them and create more effective variations for testing.

Remember that a clearly defined audience reduces noise in your results, which makes real differences easier to detect. Keep in mind, though, that a narrower segment also means fewer visitors, so the test may need to run longer to reach statistical significance. Take the time to carefully identify and research your ideal customers before launching any experiments.

Choosing The Right Elements To Test

When it comes to A/B testing, choosing the right elements to test is crucial. The first step in selecting what to test is identifying your target audience and their behavior patterns.

This can include anything from which landing pages they visit most frequently and how often they click through, to how long they spend on your website.

Once you have a clear idea of who you are targeting, it’s time to choose the specific elements of your website or product that you want to test. These could range from small changes like button colors and font size, all the way up to major design overhauls or shifts in messaging strategy. By honing in on these specific variables, you can create more effective tests that provide data-driven insights for optimizing future marketing efforts.

Selecting The Right Testing Tool

Choosing the right tool for A/B testing can make a world of difference in achieving optimal results. With multiple options available, selecting the one that meets your needs and objectives is crucial.

Tools like Optimizely, VWO, and Google Optimize (which Google discontinued in September 2023) offer various features such as drag-and-drop editors, multivariate testing capabilities, and heat mapping to help you plan and execute successful A/B tests. It’s important to research each option thoroughly before making a selection based on factors like price, ease of use, support, and integrations with other tools.

Different businesses have different objectives for their websites or apps, so the choice of an A/B testing tool should rest on the factors relevant to them. These might include the pages or app functionality being tested (product descriptions vs. sign-up forms), how much traffic will be allocated to each variation, and how well the tool fits with existing data analysis and conversion rate optimization work.

Thorough research helps businesses choose a testing tool that aligns with their marketing goals. It also helps ensure that the data gathered is reliable enough to analyze differences in user behavior between the two versions and to produce statistically significant results that can guide future tests and improvements.

Key Elements Of An A/B Test: Creating Effective Variations

To create effective variations for an A/B test, it’s important to allocate traffic and select the audience, determine how long the test should run, and account for statistical significance so that the test produces reliable data.

Allocating Traffic And Selecting The Audience

Allocating traffic and selecting the audience are crucial elements to any A/B test. It’s important to determine how much traffic should be allocated to each variation, as this can affect the accuracy of your results.

Split testing is a common approach that involves dividing traffic evenly between variations, but it may not always be the best option depending on factors such as sample size and conversion rates.

Selecting the right audience for an A/B test is just as important as allocating traffic. You need to identify which segments of users you want to target based on their demographics, behavior, or other criteria.

This will help ensure that your results are relevant and actionable for your business goals. In addition, it’s important to consider any external factors that could impact user behavior during the test period, such as marketing campaigns or seasonal trends.

Determining The Duration Of The Test

Determining the duration of your A/B test is crucial to getting accurate and reliable results. The length of time you run your test determines how much data you gather, which ultimately affects the statistical significance of your results.

Generally, running a test for too short a period can lead to unreliable data, while running it for too long increases the chances of external factors affecting the outcome.

To determine how long you should run your A/B tests, consider factors such as traffic volume, required sample size, and baseline conversion rate. A power analysis can estimate the sample size you need before the test starts, and statistical tests such as the t-test or chi-squared test can then determine whether the experiment has reached statistical significance.

It’s important not to rely on software solutions or intuition alone, but also to apply proper analysis techniques so that you reach an appropriate level of confidence in your results.
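The relationship between traffic volume, sample size, and duration can be sketched with simple arithmetic. The function below is a minimal illustration, not a feature of any particular testing tool: the name `estimate_test_days` and its parameters are hypothetical, and rounding up to whole weeks is an assumption based on the common practice of covering every day of the week equally.

```python
import math


def estimate_test_days(
    required_per_variant: int,
    daily_visitors: int,
    n_variants: int = 2,
    traffic_share: float = 1.0,
) -> int:
    """Estimate how many days a test needs, rounded up to whole weeks.

    required_per_variant: visitors needed in each variant (from a sample size estimate)
    daily_visitors: average eligible visitors per day
    n_variants: number of versions sharing the traffic, including the control
    traffic_share: fraction of eligible visitors included in the test (0 < share <= 1)
    """
    if required_per_variant <= 0 or daily_visitors <= 0 or n_variants < 2:
        raise ValueError("sample size and traffic must be positive, with at least 2 variants")
    if not 0 < traffic_share <= 1:
        raise ValueError("traffic_share must be in (0, 1]")

    per_variant_per_day = daily_visitors * traffic_share / n_variants
    raw_days = math.ceil(required_per_variant / per_variant_per_day)
    # Assumption: run for complete weeks so weekday and weekend behavior are both covered.
    return math.ceil(raw_days / 7) * 7


if __name__ == "__main__":
    # Illustrative numbers only: 8,000 visitors needed per variant, 1,500 visitors a day.
    print(estimate_test_days(required_per_variant=8_000, daily_visitors=1_500))
```

With these illustrative inputs, each variant receives 750 visitors a day, so the test needs 11 days of traffic and is rounded up to 14.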

Incorporating Statistical Significance

Incorporating statistical significance is crucial when conducting A/B testing to ensure that the results obtained are not just due to chance. Statistical significance helps determine whether a page variation has produced an actual improvement in performance, or if it’s just a fluke. Therefore, it’s important to set the confidence level and the required sample size before running any tests.

When incorporating statistical significance in A/B testing, businesses need to test new product variants against existing products and evaluate their impact on customer behavior thoroughly. This can help identify effective variations that produce statistically significant improvements in conversion rates, bounce rates, or other key metrics.

By continuing with this approach over time, businesses can then gain valuable insights into user behavior which they can use for future tests and optimization efforts.

Conducting An A/B Test: Launching The Test And Gathering Data

The next step of A/B testing involves launching the test, gathering data, and then, in post-test analysis, interpreting the results to determine statistical significance and identify winning variations.

Gathering And Interpreting Data

Once the A/B test is launched, it’s time to start gathering and interpreting data. This involves collecting both qualitative and quantitative data, including metrics such as click-through rates and user behavior on each variation. The goal of this phase is to gain insights into which variation is performing better.

To gather reliable data, it’s essential to have a sufficient sample size and wait for statistical significance before drawing any conclusions. Once enough data has been collected, it’s crucial to review the results carefully and identify any trends or patterns that emerge. By analyzing the data gathered through A/B testing, businesses can make informed decisions about website optimization efforts and continuously improve their conversion rates over time.

Identifying Winning Variations

Identifying winning variations is the ultimate goal of A/B testing. It involves analyzing the data gathered from your test to determine which variation performed better than the other. It’s important to note that for a variation to be considered a winner, it must have achieved statistical significance.

Achieving statistical significance means that enough data has been collected to conclude, at your chosen confidence level, that the observed difference between the two versions is unlikely to be due to chance alone. This ensures reliable data and accurate insights into user behavior. Once you’ve identified your winning variation, you can implement it on your website or app and continue testing new ideas for continuous optimization.

Determining Statistical Significance

Determining statistical significance is a crucial step in conducting an A/B test. It involves analyzing the data collected during the experiment to determine whether the difference between two versions of a web page is statistically significant, that is, large enough relative to the noise in the data to conclude that one version performs better than the other. Statistical significance measures how confident we can be that the results are not due to chance, and it is essential for making informed decisions based on the A/B test results.

To determine statistical significance, choose a statistical test appropriate to the metric and compare the resulting p-value with the significance level you set in advance. Confidence intervals complement this by showing the range of plausible values for the difference, which helps you judge whether the effect is large enough to matter in practice. By achieving statistically significant results, businesses can make data-driven decisions about which variation performs best and ultimately increase conversions and revenue.
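For conversion rates, one common way to check significance is a two-proportion z-test. The sketch below is one possible approach rather than the method any specific tool uses; the function name `two_proportion_z_test` and the visitor and conversion counts are made up for illustration, and the normal approximation it relies on assumes reasonably large samples.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Compare conversion rates of versions A and B.

    Returns (z, p_value, ci), where ci is a confidence interval for the
    difference in conversion rate (B minus A).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a

    # Pooled standard error: used for the test, which assumes no true difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pool = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se_pool == 0:
        raise ValueError("cannot test when there are no conversions or only conversions")
    z = diff / se_pool
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided

    # Unpooled standard error: used for the confidence interval of the difference.
    se_diff = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    ci = (diff - z_crit * se_diff, diff + z_crit * se_diff)
    return z, p_value, ci


if __name__ == "__main__":
    # Illustrative data: A converts 200 of 5,000 visitors, B converts 250 of 5,000.
    z, p_value, ci = two_proportion_z_test(200, 5_000, 250, 5_000)
    print(f"z = {z:.2f}, p = {p_value:.4f}, 95% CI for lift = ({ci[0]:.4f}, {ci[1]:.4f})")
```

A p-value below the chosen significance level (0.05 for 95% confidence) suggests the difference is unlikely to be chance, while the interval shows how large or small the real difference might plausibly be.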

Implementing Test Results: Applying Winning Variations And Continuous Optimization

After identifying the winning variations in an A/B test, it’s crucial to implement them on your website and continue optimizing over time for better results.

Conducting Continuous Multivariate Testing And Optimization

Continuous testing and optimization are integral parts of A/B testing and conversion optimization. Once you have identified winning variations, it is crucial to apply them and keep testing so that your website continues to improve. By doing so, you can keep improving your conversion rates over time.

To conduct continuous testing and optimization effectively, it is important to develop a hypothesis testing program that spans the entire customer life cycle. This includes initial user testing of landing page experiences, sign-up forms, product descriptions, check-out processes, and other critical touchpoints with customers. Experts at Google suggest deploying A/B testing within all customer interactions to improve the overall testing process and gather reliable data for future tests.

Avoiding Common A/B Testing Mistakes

To avoid common A/B testing mistakes, it’s important to test one variable at a time and properly consider factors such as statistical significance, feedback and customer behavior.

Testing Too Many Variables At Once

Testing too many variables at once is one of the most common A/B testing mistakes. When you change several elements or run multiple variations simultaneously, it becomes difficult to determine which specific element is affecting your results. It’s important to focus on testing one variable at a time in order to draw valid conclusions and make data-driven decisions.

Another reason why testing too many variants at once is not a good idea is that it can lead to invalid test results. If you violate the assumptions of the test, such as normal distribution or sample size requirements, the test has a higher false positive rate than you think. Therefore, it’s best to keep your tests simple and focus on producing statistically significant results by controlling for external factors and minimizing random error.
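The effect of many simultaneous comparisons on the false positive rate can be shown with a short calculation. This is a simplified sketch that assumes the comparisons are independent; the function names are hypothetical, and the Bonferroni correction shown is only one of several ways to compensate.

```python
def family_wise_error_rate(alpha: float, n_comparisons: int) -> float:
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** n_comparisons


def bonferroni_alpha(alpha: float, n_comparisons: int) -> float:
    """Stricter per-comparison threshold that keeps the overall rate near alpha."""
    return alpha / n_comparisons


if __name__ == "__main__":
    for k in (1, 3, 5, 10):
        rate = family_wise_error_rate(0.05, k)
        print(f"{k:>2} comparisons: overall false positive rate {rate:.1%}, "
              f"Bonferroni threshold {bonferroni_alpha(0.05, k):.4f}")
```

With a 5% threshold, five independent comparisons already carry roughly a 23% chance of at least one false winner, which is why simple tests with few variants are easier to trust.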

Not Testing For Statistical Significance

One of the most common mistakes in A/B testing is not testing for statistical significance. This mistake can lead to false positives and invalid conclusions, ultimately wasting time and resources. Statistical significance ensures that any observed differences between variations are not due to random chance but rather based on reliable data.

To achieve statistical significance, it’s important to have a large enough sample size and an appropriate confidence level. Without these factors, you may end up with inconclusive or erroneous results. In fact, over half of first-time A/B test users report not producing significant results, highlighting the importance of proper statistical analysis in A/B testing.

Ignoring Feedback And Results

Ignoring feedback and results is one of the most common mistakes in A/B testing. It’s crucial to pay attention to both positive and negative feedback, including what you learn from test ideas that failed, as it can provide valuable insights into what works and what doesn’t. Failing to do so can lead to missed opportunities for improvement, resulting in less effective marketing campaigns.

In addition, ignoring test results can also be detrimental. Even if the outcome of a particular test isn’t what was expected or desired, it’s essential to analyze the data properly. Ignoring results can lead to incorrect conclusions that carry over into later tests, since those build on past measurements.

Overall, taking customer feedback seriously and paying close attention to the analysis of test results is essential for any company seeking success through A/B testing. By avoiding this mistake and applying best practices throughout their testing cycles, businesses can gain invaluable information about how users interact with their products or services, which can be leveraged into increased conversion rates over time.

Not Considering External Factors

When conducting A/B testing, it’s important to keep external factors in mind. Ignoring these can negatively impact your test results and make them invalid. For instance, a marketing campaign or technical error could alter user behavior and affect the outcome of the test.

One way to avoid this is by running tests for an extended period of time. This will help capture any seasonal trends that may skew data during certain times of the year. Additionally, analyzing website data before conducting any tests can give insight into potential changes that may have occurred outside of your control.

By keeping external factors in mind during A/B testing, you’ll be able to produce more reliable and accurate results that drive successful optimization strategies for your business.

Best Practices For A/B Testing

In the “Best Practices for A/B Testing” section, we cover crucial tips such as testing one variable at a time, determining proper sample sizes and control groups, including qualitative data in your analysis, and conducting regular testing schedules. Don’t miss out on these essential best practices that can significantly impact your test results and improve your website’s conversion rates!

Testing One Variable At A Time

When conducting A/B tests, it’s crucial to test one variable at a time. It may be tempting to test multiple elements simultaneously, but doing so risks creating unreliable data and false positives. By testing one variable at a time, businesses can clearly identify which element had the most impact on conversion rates or other key performance indicators.

Splitting traffic between multiple variations of different variables can also lead to inconclusive results. By focusing on just one element such as copy or layout, businesses can produce statistically significant results with enough data gathered over an appropriate duration of time. In short, testing one variable at a time is a fundamental best practice that helps ensure reliable and accurate data interpretation for successful optimization efforts in conversion research and beyond.

Proper Sample Size And Control Groups

Proper sample size and control groups play a critical role in the success of an A/B test. Determining the right sample size helps ensure that statistical significance is achieved. In addition, it is important to have a control group that represents the existing version of your product or website. This allows for an accurate comparison between the two versions being tested.

To estimate the necessary sample size, it’s important to consider factors such as the desired confidence level, the statistical power, and the smallest improvement you want to be able to detect. These calculations can be done using online calculators or by consulting with experts. Additionally, having a clear understanding of your target audience and key performance indicators will help determine the ideal control group for your A/B test.
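The calculation that online sample size calculators perform can be approximated with a standard formula for comparing two conversion rates. The sketch below is an approximation under stated assumptions: it uses the normal approximation, a two-sided test, and equal group sizes, and the function name, the 5% baseline, and the 20% relative lift are illustrative choices rather than recommendations.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    min_detectable_lift: float,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Approximate visitors needed in each group to detect a relative lift.

    baseline_rate: current conversion rate of the control, e.g. 0.05 for 5%
    min_detectable_lift: smallest relative improvement worth detecting, e.g. 0.20 for +20%
    alpha: significance level (1 - confidence level)
    power: probability of detecting the lift if it really exists
    """
    if not 0 < baseline_rate < 1 or min_detectable_lift <= 0:
        raise ValueError("baseline_rate must be in (0, 1) and the lift must be positive")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    if p2 >= 1:
        raise ValueError("baseline_rate * (1 + lift) must stay below 1")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Illustrative inputs: 5% baseline conversion rate, detect a 20% relative lift.
    print(sample_size_per_variant(0.05, 0.20))
```

For these inputs the estimate comes out at a little over 8,000 visitors per group. Halving the lift you want to detect roughly quadruples the required sample, which is why small expected improvements need much more traffic.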

Incorporating proper sample size and control groups into your A/B testing process will provide reliable data for making informed decisions on how to optimize conversion rates. By conducting controlled experiments and accurately measuring results, businesses can improve their marketing strategies to achieve greater success in reaching their goals.

Regular Testing Schedule

A regular testing schedule is a crucial aspect of A/B testing best practices. This involves conducting frequent tests to continuously improve website or app performance. By doing so, businesses can stay ahead of the competition and identify areas for improvement in their marketing strategies.

Moreover, regular testing allows marketers to collect reliable data and produce statistically significant results over time. It’s essential to track key performance indicators (KPIs) regularly to ensure that test variations are delivering improvements in conversion rates or other metrics relevant to your business goals. With a consistent approach, companies can achieve ongoing growth and optimization through A/B testing.

Including Qualitative Data

Including qualitative data in A/B testing can provide valuable insights into the user experience and emotional impact of a webpage or element. Qualitative data, such as feedback from user surveys or usability tests, can help identify areas that need improvement and guide the optimization process.

Using qualitative data alongside quantitative metrics like conversion rates can help create a more comprehensive view of how users interact with a webpage. For example, while quantitative data may show that Version B outperformed Version A, qualitative data could reveal that users found Version A to be more visually appealing or easier to navigate. By combining both types of data, businesses can make informed decisions on which elements to optimize for improved results.

Tools And Resources For A/B Testing

The guide covers various A/B testing tools and resources, including software platforms, analytics tools such as Google Analytics, expert consultants and agencies, and industry resources and guides to help businesses optimize their website’s conversion rates.

A/B Testing Software And Platforms

When it comes to A/B testing, selecting the right software or platform is crucial. There are plenty of options available on the market, and choosing the best fit depends on a variety of factors such as budget, features needed, and ease of use. Popular A/B testing software includes Optimizely, Google Optimize, and VWO (Visual Website Optimizer), while tools such as Hotjar complement them with heatmaps and other behavior analytics.

Each platform offers different functionality which ranges from basic split tests to more advanced multivariate tests that can test several elements simultaneously. Moreover, some platforms offer diverse integrations with other tools such as analytics or customer relationship management systems. It’s essential to evaluate these aspects before committing to an A/B testing tool that will help you achieve statistically significant results for your website or app.

Expert Consultants And Agencies

Expert consultants and agencies play a crucial role in A/B testing. They can provide businesses with the necessary tools and resources for successful testing, including specialized software and platforms like Google Analytics, Omniture, or Mixpanel. These tools help to identify areas of improvement on web pages, such as product descriptions or the sign-up form.

Moreover, expert consultants and agencies can offer useful insights into user behavior through qualitative research techniques like user testing or surveys. This qualitative analysis complements quantitative analysis by providing context for website visitors’ actions. Additionally, these professionals bring technical knowledge to ensure reliable data collection and analysis processes.

In conclusion, expert consultants and agencies can have a significant impact on businesses looking to improve conversion rates through A/B testing by providing valuable expertise that enhances their marketing strategy.

With their help, companies can optimize landing pages effectively and reduce false positives when interpreting results, thanks to statistically significant tests run over a sufficient time frame. These are all essential elements for achieving success in this field.

Industry Resources And Guides

When it comes to A/B testing, there’s no shortage of industry resources and guides available online. From beginner-friendly articles to in-depth technical tutorials, businesses of all kinds can find practical guidance on creating and implementing effective A/B tests. Whether you’re looking for inspiration for test ideas or need help interpreting your data metrics, these resources are a great place to start.

One of the benefits of using industry resources and guides is that they often provide real-world examples of successful A/B tests. By learning from the experiences of other businesses, you can gain valuable insights into what works (and what doesn’t) when designing your own tests. Additionally, these resources may include case studies, user research findings, and best practices for achieving statistically significant results – all useful information for optimizing your conversion rates over time. So whether you’re new to A/B testing or an experienced optimizer looking to stay up-to-date with the latest trends, be sure to explore some of the many industry resources available at your fingertips!

Conclusion

In conclusion, A/B testing is a crucial tool for any business looking to optimize its website and increase conversions. By running effective tests and implementing winning variations, businesses can see significant improvements in revenue, conversion rates, and customer engagement.

It’s important to plan ahead, use the right tools, and gather reliable data when conducting A/B tests. Continuous optimization is key to staying ahead of the competition and achieving long-term success. So why wait? Start experimenting today with your website elements, test your ideas one at a time, and watch your conversion rates soar!

General Facts

1. “The Complete A/B Testing Guide” is a comprehensive guide to A/B testing for anyone looking to improve their website’s conversion rates and revenue.

2. The guide includes definitions, best practices, and tools for running successful A/B tests.

3. A/B testing is a powerful technique for increasing conversions and revenue.

4. The guide includes a start-to-finish tutorial on how to run A/B tests.

5. The idea behind A/B testing is to show a modified version of a product to a sample of customers and compare it to the original version.

6. A/B testing can involve testing different CTA copy, its placement on a landing page, and even its size and color scheme.

7. A/B testing is a method of gathering insight and collecting data to aid in optimization.

8. Statistical analysis is used to determine the winner of an A/B test.

9. The guide includes expert tips and advice from professionals at Google and other companies.

10. A/B testing can be used in a variety of industries, including marketing, data science, and more.

Facts About The Definition Of A/B Testing Or Split Testing: Understanding The Purpose And Benefits Of A/B Testing

1. There are various definitions and explanations of A/B testing available from reputable sources such as VWO, Harvard Business Review, and Optimizely.

2. A/B testing is a method of comparing two versions of something, such as a web page or app, to determine which one performs better in terms of generating conversions and revenue.

3. A/B testing is often referred to as split testing and is based on statistical methods, although basic statistical knowledge is sufficient to generate meaningful results.

4. The purpose of A/B testing is to gather insights that aid in optimization, helping businesses improve their marketing strategies and increase their bottom line.

5. The Complete A/B Testing Guide offers a start-to-finish tutorial on how to run A/B tests, providing practical guidance for both beginners and pros.