AB Test Example

Introduction to A/B Testing

Are you tired of guessing which elements on your website or landing pages are performing the best?

Look no further than A/B testing, a powerful tool used to compare two versions and determine which one performs better.

Whether you’re an online store looking to increase conversions or a digital agency trying to improve user engagement, A/B testing can provide valuable insights into what resonates with your target audience.

In this blog post, we’ll dive into successful examples of A/B tests, how to conduct them, what elements to test, and much more. So let’s get started!

What is A/B Testing

A/B testing involves comparing two versions of something to determine which one performs better, and it is a valuable tool in marketing campaigns to improve conversion rates.

Why A/B Test?

Also known as split testing, A/B testing is commonly used in web design and marketing to optimize campaigns and improve user experience.

The purpose of A/B testing is to help businesses make data-driven decisions that can lead to increased conversions and revenue.

By creating variations and measuring the results, organizations can identify what works best for their audience and adjust their approach accordingly.

Types Of A/B Testing

In web optimization, businesses can choose from several A/B testing methods to improve website or app performance.

One is landing page A/B testing, which involves building two different versions of a landing page and comparing their conversion rates.

Another is split testing at the element level, which runs multiple versions of a single element, such as a headline or call-to-action button, at the same time to see which performs best.

Like the others, this technique gives businesses data on what resonates most with their target audience.

Beyond these methods, there are other useful A/B testing strategies.

Multivariate testing, for example, examines how multiple elements of a webpage interact by testing combinations of their variations.
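To make the idea of testing combinations concrete, here is a minimal sketch that lists every variant a multivariate test would need. The headlines, colors, and image styles are hypothetical placeholders, not values from any real test.

```python
from itertools import product

# Hypothetical variations for three page elements.
headlines = ["Save time today", "Work smarter"]
button_colors = ["green", "red"]
hero_images = ["product", "lifestyle"]

# A multivariate test covers every combination of the variations,
# so the number of variants grows multiplicatively.
combinations = list(product(headlines, button_colors, hero_images))

for headline, color, image in combinations:
    print(f"headline={headline!r}, button={color}, image={image}")

print(f"{len(combinations)} variants to test")
```

Because the variant count multiplies with each added element, multivariate tests generally need much more traffic than a simple two-version test.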

Similarly, funnel testing examines user behavior across the pages of a purchase process. It helps identify bottlenecks and reduce drop-off points, which ultimately raises the conversion rate.

The key takeaway is that A/B testing covers a range of techniques that businesses can use to test and refine their web optimization strategies.

Through ongoing experimentation and the collection of real-time test results, businesses can home in on what works for their target audiences, improve website or app performance, and drive growth.

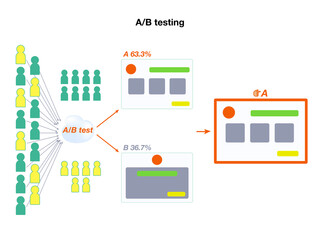

How To Conduct A/B Testing

To conduct an A/B test, you need to identify a page or element on your website or app that you want to improve, such as a landing page or call-to-action button.

You then create two different versions of the page, with only one variable changed between them – this could be anything from the color of a button to the text in a headline.

Next, you randomly divide your audience into two groups and show each group one version of the page.

Collect data on how each group interacts with their respective pages, including metrics like click-through rates and form submissions.

Finally, compare the results for each test version of the page to determine which performed better and make changes accordingly for future iterations.
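The random split described above is often implemented by hashing a visitor identifier, so the same visitor always sees the same version. The sketch below is one way to do it under that assumption; the function name, the experiment label, and the user IDs are illustrative and not taken from any particular testing tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to a variant with a roughly even split."""
    # Including the experiment name keeps assignments independent across tests.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Count how 10,000 hypothetical visitors are split between the two versions.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "cta-button-color")] += 1
print(counts)
```

Hashing rather than calling a random number generator on each page view means a returning visitor is not switched between versions partway through the test.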

Examples Of Successful A/B Tests

The case studies below, from HubSpot, Groove, Dropbox, Wall Monkeys, and Humana, show how tests on a search bar, a landing page, a product video, product images, and a homepage banner each produced measurable gains.

HubSpot’s Site Search Experiment

HubSpot, a leading inbound marketing and sales platform, conducted an A/B test on their site search feature to determine which version would perform best among users.

The two versions tested included one with a larger search bar and another that was more minimalist in design.

The results of the test showed that the larger search bar version had a 27% increase in searches per visitor compared to the minimalist version.

This insight provides valuable information for HubSpot’s website design and optimization decisions moving forward.

Overall, this example of a successful A/B test highlights the importance of testing even small components of a website, such as site search features, to gain insights into user behavior and preferences.

Groove’s Landing Page Redesign

To improve their conversion rate, Groove, a customer support software company, ran an A/B test on their landing page design.

Groove deployed two versions of the same landing page, each with different design components, including background images and font style.

After a few weeks of testing, Groove recorded a substantial increase in their conversion rate.

Specifically, the new version of the landing page lifted the conversion rate from 2.3% to 4.3%, a gain of two percentage points, or roughly 87% in relative terms.

This example shows how much even small variations in design elements can affect user engagement and conversion rates.

With data from real-time testing, Groove could make informed decisions about which design elements resonated most with their target audience.

In sum, this case study underscores the value of continually running tests and adjusting website design based on data, rather than guessing at what will be most effective for visitors and customers.
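Percentage-point change and relative lift are easy to mix up when reporting a result like this one. The sketch below computes both for the 2.3% and 4.3% conversion rates quoted in this case study; the function name is illustrative.

```python
def lift(control_rate: float, variant_rate: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change in percent)."""
    absolute_points = (variant_rate - control_rate) * 100
    relative_percent = (variant_rate - control_rate) / control_rate * 100
    return absolute_points, relative_percent


# Conversion rates from the case study, expressed as fractions.
points, relative = lift(0.023, 0.043)
print(f"{points:.1f} percentage points, {relative:.0f}% relative lift")
```

Stating which of the two figures a headline number refers to avoids confusion when comparing results across tests.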

Dropbox’s Video Experiment

Dropbox’s Video Experiment is an example of the power of A/B testing when it comes to video marketing.

The company decided they wanted to increase user engagement with their product tour video, but they didn’t know what changes would be most effective.

They decided to create two variations of the same product tour: one version had a more detailed voiceover, and the other used text overlays in addition to a shorter voiceover.

By conducting this test, Dropbox found that users were significantly more engaged with the variation that included both text overlays and a shorter voiceover.

This allowed them to optimize their video content for engagement and ultimately led to increased conversions on their website.

This test’s success demonstrates how important it is for businesses to continually test different elements of their marketing strategies using A/B testing techniques.

Wall Monkeys’ Image Experiment

Wall Monkeys, a company that sells wall decals and murals, conducted an A/B test to determine which version of their product images was more effective in driving conversions.

The control version featured product images on a white background, while the variant featured lifestyle shots of the products in use.

The result? Wall Monkeys found that the variant with lifestyle shots had a 129% increase in conversions compared to the control.

By showcasing their products in real-life settings, Wall Monkeys was able to better connect with potential customers and increase engagement.

This example highlights the importance of testing different variations when it comes to visual elements like product images.

By experimenting with different approaches and analyzing the results, businesses can optimize their website for their target audience and ultimately drive more sales.

Humana’s Site Banner Experiment

Humana, a well-known insurance carrier, conducted a simple yet successful A/B test on their site banner.

They tested two different banners on their homepage to see which design and call-to-action was more effective.

The winning version resulted in a 433% increase in clicks and an impressive 1,426% increase in conversions.

This A/B test example by Humana has been included in various articles and case studies on A/B testing, indicating its effectiveness and impact.

It’s a great reminder that even small changes such as tweaking the home page banner can lead to significant improvements in conversion rates and revenue.

By testing elements like this regularly through A/B tests, businesses can gain valuable insights into customer behavior and continuously improve their marketing strategy for better results.

Elements To Test In A/B Testing

In A/B testing, businesses can test various elements on their website and landing pages such as headlines, design layout, button colors and placement, forms and fields, calls-to-action, and more to improve conversion rates.

Landing Pages

Landing pages are a crucial element in any marketing campaign, and A/B testing allows you to optimize them for better conversion rates.

By comparing two versions of a landing page, businesses can see which design and content elements work best with their target audience.

Elements that can be tested on landing pages include headlines and copy, calls-to-action, forms and fields, and button colors and placement, among others.

In A/B testing for landing pages, it’s essential to test one variable at a time to get accurate results. Keeping an unchanged control version of the page is also important, so that the effect of each change can be measured against it.

Testing both big and small variables is recommended as it can lead to more significant improvements in conversions over time.

Additionally, make sure your sample size is large enough before concluding the test, so that the results are reliable and statistically significant.

Calls-to-Action

Calls-to-action (CTAs) are essential in A/B testing as they can significantly impact your conversion rates.

By using different variations of CTAs, you can determine which one works best for your target audience.

It is crucial to test not only the copy but also the color, placement, and size of your CTAs to see how these factors affect user engagement.

A surprising result achieved through A/B testing was the ability to lift conversions by merely changing a CTA’s verb tense from future to present.

This small tweak in language resulted in a 90% increase in clicks on a CTA button. Therefore, it is always worthwhile to run tests with different variations of your CTA language and design elements to ensure that you are maximizing valuable real estate on your website or app for optimal results.

Headlines And Copy

When it comes to A/B testing, headlines and copy are crucial elements that can make or break a marketing campaign.

Testing different variations of headlines and copies can help businesses identify which versions resonate best with their target audience.

By analyzing the test results, companies can then optimize their messaging for better user engagement.

One example of how page content affects conversions is Dropbox’s video experiment.

The company tested two versions of their homepage – one with a screenshot of their product demo video and the other with the actual product demo video embedded on the page.

After running the test, they discovered that the variant with the embedded video had a 10% increase in conversions compared to the control version.

This demonstrates how small changes like adding a video can have a significant impact on conversion rates.

Design And Layout

In A/B testing, the design and layout of a webpage or app screen can have a significant impact on user behavior.

Testing different variations of design elements such as font style, font size, background images, and visual elements can help determine which version performs better in terms of user engagement and conversion rates.

One example of successful A/B testing for design was conducted by Wall Monkeys. They tested two versions of their website homepage with different variations in the navigation bar and call-to-action buttons. The first test run alone resulted in an 11% increase in sales for the winning version.

When conducting A/B tests for design and layout, it’s important to focus on valuable real estate such as the homepage, product pages, checkout page, and signup process.

Test one variation at a time, and make sure the results reach statistical significance before drawing conclusions.

Continuously testing new ideas, informed by data from previous tests run in a real-time environment, pays off over time.

Forms And Fields

When it comes to A/B testing, web forms and fields are crucial elements that can greatly impact conversion rates.

By testing different variations of forms, businesses can determine what fields or form styles resonate best with their target audience.

For example, one test may focus on whether potential customers are more likely to complete a shorter form with fewer fields versus a longer form with more detailed questions.

Fields within web forms are also key elements that should be tested in A/B testing. By experimenting with different placeholder text, font sizes, and input styles within these fields, businesses can optimize the overall user experience and increase conversions.

In fact, research has shown that simplifying placeholder text within web forms can lead to higher completion rates and ultimately result in more valuable data collected from consumers.

Button Colors And Placement

Button colors and placement are crucial elements to test in A/B testing. Testing different button colors can have a significant impact on conversion rates.

For example, a well-known experiment showed that changing the color of a CTA button from green to red increased conversions by as much as 21%.

Additionally, testing different placements of buttons on landing pages or checkout pages can lead to more sales and more form submissions.

When thinking about button placement, it is important to consider valuable real estate on your website.

Placing buttons above the fold on a signup page, and in prominent locations like the navigation bar or home page, can increase user engagement and click-through rates.

However, it’s essential to run tests with real-time data collected from your target market before making any permanent changes.

By conducting A/B tests with Convert’s tool or other platforms, you can gauge what works best for your business goals and potentially boost conversion rates through optimal button colors and placement.

What Are The Benefits Of A/B Testing In Marketing?

A/B testing provides numerous benefits for marketing, including increased website performance and user experience, improved conversion rates and revenue, gaining insights into customer behavior, and understanding the impact of changes.

Read on to learn how you can implement A/B testing for your business success!

Increasing Website Performance And User Experience

A/B testing is a crucial tool for increasing website performance and user experience. By comparing two variations of a web page or asset, businesses can optimize site design, functionality, and content for their target audience.

Conducting A/B tests to determine the effectiveness of specific elements such as CTAs, forms, headlines and copy, and button placement and color helps improve overall user engagement.

The benefits of A/B testing in marketing are numerous when it comes to increasing website performance and user experience.

It not only helps identify what works best with your customers but also gives you valuable data on visitors who would otherwise have abandoned your site without converting.

This method provides companies with insights needed to make informed decisions about their online store presence while reducing risks associated with guessing how users will react to a new design or feature.

Improving Conversion Rates And Revenue

A/B testing is a powerful tool in improving conversion rates and revenue for online businesses. By comparing different versions of landing pages, calls-to-action, headlines and copy, design and layout, forms and fields, button colors and placement among other elements, A/B testing can help increase the effectiveness of marketing campaigns.

In fact, Dropbox’s video experiment resulted in a 10% increase in sign-ups, while Humana’s site banner experiment led to a 433% increase in clicks.

Through A/B testing, businesses can gain valuable insights into customer behavior that enable them to optimize their websites accordingly.

For instance, Wall Monkeys’ image experiment found that lifestyle shots of products in use increased conversions by 129% compared to product images on a white background.

By continuously running A/B tests on elements of their website or marketing strategy, and by applying statistical significance, real-time environments, and the other best practices outlined in this post, business owners can drive better results for their bottom line over the long term.

Gaining Insights Into Customer Behavior

A/B testing provides valuable insights into customer behavior that can be harnessed to improve marketing strategies and increase conversion rates.

By evaluating how different elements of a webpage or marketing asset perform with your target audience, you can collect data on their preferences and behaviors that can inform future optimization efforts.

For instance, A/B testing can help determine which variations of ad copy or design are most effective at capturing user attention and generating clicks.

Additionally, by tracking how users engage with landing pages and forms during the purchase process, businesses can identify pain points in the customer journey and refine their approach to optimize for conversions.

Ultimately, gaining insights into customer behavior through A/B testing is an essential step towards creating more impactful marketing campaigns that resonate with your target audience.

Understanding The Impact Of Changes

When conducting A/B testing, it’s important to understand the impact of the changes you make. Even small tweaks to a landing page or call-to-action can have a significant effect on conversion rates and user engagement.

For example, changing the wording or color of a button could mean the difference between someone clicking through or abandoning your website.

By gaining insights into how specific changes impact user behavior, businesses can optimize their marketing campaigns and improve overall performance.

Some surprising results might even challenge assumptions about what works best with target audiences. With careful analysis of test results, companies can refine their marketing strategies and ultimately see more traffic, sales, and success.

Best Practices For A/B Testing

To ensure effective A/B testing, it is best to define clear goals and metrics, test one variable at a time, use statistical significance, test on real users, learn from results, avoid biases and continuously test.

Defining Goals And Metrics

In A/B testing, one of the first priorities is to establish clear goals and metrics for the experiment.

This keeps the test focused on improving performance in specific areas, such as boosting conversions or user engagement.

Businesses should choose metrics that are relevant to their business model and aligned with their overall marketing strategy. Setting quantifiable targets also provides a yardstick for judging the test.

For instance, if the objective is to increase form submissions, set a specific numerical target for each variation being tested.

This approach allows more precise measurement of results and guards against premature conclusions based on guesswork.

By defining goals and metrics before the test begins, businesses set themselves up for a useful outcome and can make sound decisions based on data.

Testing One Variable At A Time

One important best practice for A/B testing is to only test one variable at a time. This means keeping all other elements of the landing page, email campaign, or ad consistent and changing just one element such as the headline or button color.

By isolating variables this way, it becomes easier to determine which changes have the greatest impact on conversions.

Testing one variable at a time also helps avoid confusion when analyzing test results. If multiple variables are changed simultaneously and there is an uptick in conversions, it can be hard to determine which change was responsible for the increase.

Focusing on single variations makes it simpler to recognize which alterations work best with your audience, so you can run more successful marketing campaigns, drive more purchases from your website, and increase your revenue potential.

Using Statistical Significance

In A/B testing, statistical significance is crucial to ensure accurate and reliable results. A result is statistically significant when the observed difference between two versions of a page or element would be unlikely to arise from random chance alone.

A statistically significant result gives you good grounds to attribute the difference in user behavior to the change you made. A result that is not significant means the test could not distinguish the versions, which is not the same as proving they perform identically.

To reach significance, set an appropriate sample size that represents the target audience and generates enough data for analysis.

Additionally, define clear metrics beforehand and use established statistical methods such as p-values or confidence intervals.

By using these methods, marketers can confidently determine which version works better with their target audience and improve website performance over time with more informed marketing decisions based on solid data analysis.
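One common way to obtain a p-value for two conversion rates is a two-proportion z-test. The sketch below is a minimal version using only the Python standard library; the visitor and conversion counts are made-up numbers for illustration, and the 0.05 threshold is a conventional choice rather than a rule.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z statistic, two-sided p-value) for rates conv_a/n_a vs conv_b/n_b."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 230 of 10,000 visitors converted on A, 290 of 10,000 on B.
z, p_value = two_proportion_z_test(230, 10_000, 290, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("significant at 0.05" if p_value < 0.05 else "not significant at 0.05")
```

The normal approximation used here assumes reasonably large samples; with very small counts an exact test is a safer choice.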

Testing On Real Users

One critical aspect of conducting A/B tests is to test on real users. This step involves showing two variations of a resource to a panel of users to determine the version that delivers the biggest impact and achieves goals.

By testing on real users, you can gain more insights into how your target market interacts with your website or product.

Additionally, testing on real users allows you to observe user engagement in a real-time environment, which helps you make data-driven decisions and improve conversion rate optimization.

It’s important to note that tests on real users need an adequate sample size, otherwise the results can be misleading.

Testing on real users supports growth because it tells businesses how their audience actually behaves, so they can focus improvements, such as raising lead magnet conversion rates, where the data shows they will be useful.

Learning From Results

Learning from A/B test results is crucial to improving the effectiveness of marketing campaigns. The data collected during an A/B test can give marketers a deeper understanding of their target audience and which elements resonate with them the most. By analyzing the results, businesses can identify areas that need improvement and make changes accordingly.

It’s essential to remember that even failed experiments can still provide valuable insights. Examining why a particular variation did not perform well allows businesses to refine their marketing strategy further. It also helps avoid making similar mistakes in future tests while providing new ideas for what could work better in future iterations. Ultimately, learning from A/B testing results leads to more informed decision-making and better overall performance.

Avoiding Biases And Testing Continuously

In order to achieve accurate and reliable results with A/B testing, it is crucial to avoid biases and continuously test. One way of doing so is by analyzing different traffic segments, such as returning users or mobile users, instead of solely focusing on core metrics like overall conversion rates. This allows for a more nuanced understanding of how different variations perform among specific subsets of the target audience.
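Breaking results down by traffic segment can be sketched as a simple grouping step. The example below assumes each visit is recorded as a `(segment, variant, converted)` tuple; that record layout and the sample data are hypothetical.

```python
from collections import defaultdict


def conversion_by_segment(events):
    """Return the conversion rate for each (segment, variant) pair."""
    # Maps (segment, variant) to [visitors, conversions].
    totals = defaultdict(lambda: [0, 0])
    for segment, variant, converted in events:
        cell = totals[(segment, variant)]
        cell[0] += 1
        cell[1] += int(converted)
    return {key: conv / visitors for key, (visitors, conv) in totals.items()}


# Hypothetical visit log.
events = [
    ("mobile", "A", False), ("mobile", "A", True),
    ("mobile", "B", True), ("mobile", "B", True),
    ("returning", "A", True), ("returning", "B", False),
]
rates = conversion_by_segment(events)
for (segment, variant), rate in sorted(rates.items()):
    print(f"{segment:<10} {variant}  {rate:.0%}")
```

Each segment holds only part of the traffic, so per-segment results need their own significance check before they are acted on.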

Furthermore, it’s important to test continuously rather than just once in a while. By running tests frequently and consistently, it becomes easier to track progress over time and make informed decisions based on data collected from previous experiments. Testing regularly also allows for quick adaptation to changes in customer behavior or market trends, ensuring that marketing efforts remain effective and up-to-date.

Challenges Of A/B Testing

Some challenges of A/B testing include getting a large enough sample size, inaccurate results, and limited testing opportunities. However, these can be overcome by using statistical significance to determine accurate results, running tests on real users, and continuously testing to gain more insights. To learn more about the power of A/B testing and how it can improve your own business’s marketing strategy, read on!

Sample Size And Time Resources

One of the biggest challenges in A/B testing is determining the appropriate sample size and testing duration. Many factors can affect this decision, including traffic volume, conversion rate, and desired confidence level. Evan Miller’s sample size calculator is a popular tool that helps marketers determine how many visitors they need to test before drawing reliable conclusions.

However, it’s important to note that relying solely on calculators or fixed sample sizes may not always provide consistent results. In fact, A/B testing with small samples (e.g., 50-100 people) can lead to false positives or negatives due to statistical noise. Marketers should consider other factors such as test complexity, site traffic patterns, and user behavior when deciding on their sample size and test duration.
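To show why 50 to 100 visitors is rarely enough, the sketch below estimates the visitors needed per version using a standard normal-approximation formula for comparing two proportions. It is not necessarily the method used by any particular calculator, and the 5% baseline, one-point minimum effect, 5% significance level, and 80% power are assumed example values.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, min_effect: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed per version to detect an absolute change of min_effect."""
    p1 = baseline
    p2 = baseline + min_effect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Assumed example: 5% baseline conversion rate, detect a rise to 6%.
n = sample_size_per_variant(0.05, 0.01)
print(f"about {n} visitors per version")
```

Smaller effects and lower baseline rates push the required sample size up quickly, which is why test duration depends so heavily on traffic volume.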

Inaccurate Results

One of the biggest challenges in A/B testing is obtaining accurate results. A sample size that is too small can undermine the validity of test results, and mishandling post-test segmentation can also lead to inaccurate conclusions.

It’s also important to avoid “sample pollution”, which refers to any unwanted influence on the samples or data used in the test. To ensure accurate results, always run tests for an appropriate amount of time and make sure you have a clear hypothesis to test against. Inaccurate results remain one of the most pressing challenges businesses face with A/B testing today.

Limited Testing Opportunities

Limited testing opportunities can be a big challenge for businesses seeking to conduct A/B tests. There are several reasons for this, including limited website traffic and insufficient time or resources to run tests on multiple pages or elements. However, it’s important to remember that even small-scale tests can yield valuable insights into customer behavior and preferences.

One way to overcome this challenge is to focus on high-value areas of your website, such as the home page or the checkout process. By prioritizing these areas, you can maximize the impact of your testing efforts even with limited opportunities. Additionally, consider partnering with a digital agency like Kelowna-based Shop Medicore Plans that specializes in conversion rate optimization (CRO) and has experience running effective A/B tests across different industries and platforms.

A/B Testing For Business Growth And Success

A/B testing is a vital tool for business growth and success. By comparing two versions of a website or marketing asset, businesses can make data-driven decisions to optimize their campaigns based on real-time feedback. This technique helps companies increase conversions, improve user experience, and gain valuable insights into customer behavior.

In today’s digital landscape, where competition is fierce and consumer preferences are constantly changing, A/B testing has become a necessary element of any marketing strategy. Companies that use this technique can stay ahead of the curve by understanding which variations perform better and making informed decisions about how to design websites or launch ad campaigns. It’s also an effective way to experiment with new ideas without risking too much time or money upfront.

Conclusion: The Power Of A/B Testing And How To Implement It For Your Business Success

A/B split testing really is a game-changer when it comes to marketing strategies. By conducting split tests, you can uncover hidden insights into your target audience that could lead to more engagement and more conversions.

From landing pages to call-to-action buttons, A/B testing gives you plenty of room for experimentation that can have a profound impact on your business success. With the help of statistical significance and calibrated experimentation, harnessing the power of A/B testing has never been easier. So go ahead – run some tests and see what surprising results you may uncover! We also have lots of case studies you can read through to see what’s worked for us in the past.