4 A/B Split Testing Mistakes Newbies Should Avoid

What if I told you that you could find conversion rate increases with every single A/B split test just by avoiding a few newbie mistakes?

It’s true. But that’s the tragic, feast-or-famine reality of A/B testing. Get it right, and your affiliate site becomes a CRO BEAST that literally forces customers to put money in your pocket. Get it wrong, and you could invest in the wrong changes, miss out on major opportunities, or even doom your website to failure.

The ironic thing is that the mistakes that hurt the most are sometimes the easiest to fix: a missed detail here, a wrong hunch there. Sometimes it’s just because you’re too eager to find the miracle fix that will double your sales overnight.

Remember: your conversion rate optimization checklist doesn’t need to be complicated.

At Convertica, we have run over 1,000 tests in the last 12 months. After working with hundreds of clients, we have identified the four most common mistakes that kill people’s earning potential and ruin their chances of making more sales. By the end of this article, you’ll know exactly which changes I made to my strategy to take my success rate with clients to nearly 100% (hey, nobody is perfect. Not even me).

Mistake #1: Not Tracking Enough Sample Data

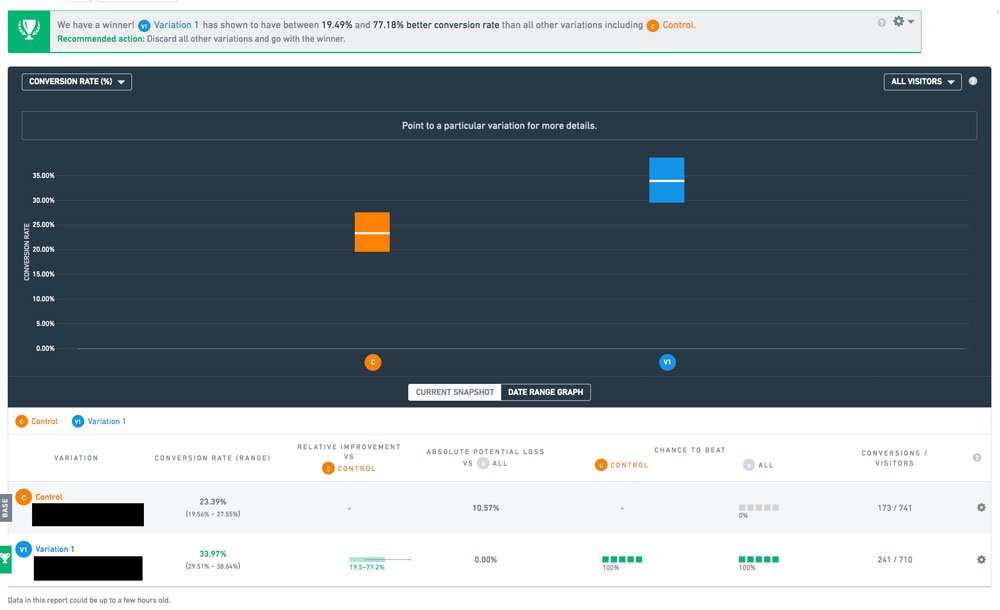

You’ve got to resist the urge to crown a champion too early. I know that feeling: you have a hypothesis, set up your test, click run, and BOOM, right out of the gate the data starts confirming what you knew all along. The variant is pummeling the control into the dust.

Chill.

There are a million and a half reasons why the variant could be outperforming the control, and most of them have nothing to do with your changes: time of day, week, month, or year; a sudden mood change; plain random luck. This is where statistical significance comes into play. Statistical significance tells you how unlikely your result would be if your change actually made no difference and the gap were just random noise. The industry standard is 95% or higher. Strictly speaking, a winner called at 95% significance means that if the variant truly had no effect, a gap at least this large would show up by chance only about 5% of the time.
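
To make the 95% bar concrete, here is a minimal Python sketch of a pooled two-proportion z-test, one common way to check whether the gap between control and variant is significant. The function name and the visitor and conversion counts are hypothetical, and your testing tool may use a different (for example, Bayesian) method, so treat this as an illustration rather than a replica of any tool.

```python
import math


def two_sided_p_value(conv_a: int, visitors_a: int, conv_b: int, visitors_b: int) -> float:
    """Pooled two-proportion z-test: control (a) vs. variant (b)."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    # Under the "no real difference" hypothesis, both arms share one pooled rate.
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF via math.erf; two-sided, so double the upper tail.
    upper_tail = 1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return 2 * upper_tail


if __name__ == "__main__":
    # Hypothetical early result: variant looks 40% better on 1,000 visitors per arm.
    p_early = two_sided_p_value(50, 1_000, 70, 1_000)
    # Same conversion rates after ten times as much traffic.
    p_later = two_sided_p_value(500, 10_000, 700, 10_000)
    for label, p in (("early", p_early), ("later", p_later)):
        verdict = "significant" if p < 0.05 else "keep testing"
        print(f"{label}: p = {p:.4f} -> {verdict} at the 95% level")
```

With these made-up numbers, the early 5% vs. 7% result lands at a p-value of roughly 0.06, just short of the 95% bar even though the variant seems to be pummeling the control, while the same rates on ten times the traffic clear it easily.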

We don’t even start to draw conclusions until a test has at least around 1,000 views. The more data you collect, the more statistical anomalies wash out and the closer to the truth you get.
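
Why is around 1,000 views a floor, and why do some tests need far more? The standard sample-size formula for comparing two conversion rates makes the trade-off visible. This sketch assumes a two-sided test at 95% significance with 80% power (a common default, not a figure from our process); the function name, baseline rate, and lifts are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate: float, relative_lift: float,
                           alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH variation to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95%
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical affiliate page converting at 5%.
    for lift in (0.20, 0.50, 1.00):
        print(f"+{lift:.0%} lift: ~{visitors_per_variation(0.05, lift):,} visitors per variation")
```

Under these assumptions, a dramatic doubling of a 5% conversion rate shows up within a few hundred visitors per variation, while a 20% lift needs roughly 8,000: the clearer the winner, the sooner the test can be called.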

Fix the Mistake: Don’t jump to conclusions. Keep the test going until you reach a high degree of statistical significance.

Even VWO can call a winner too early. Generally speaking, though, the clearer the winner, the sooner the test reaches statistical significance.

Mistake #2: Not Refining Your Data Analysis

You’ve run your first test, anxiously waited a few long weeks, and now the results are in...

A 0.48% decrease overall.

What? Is that really possible?

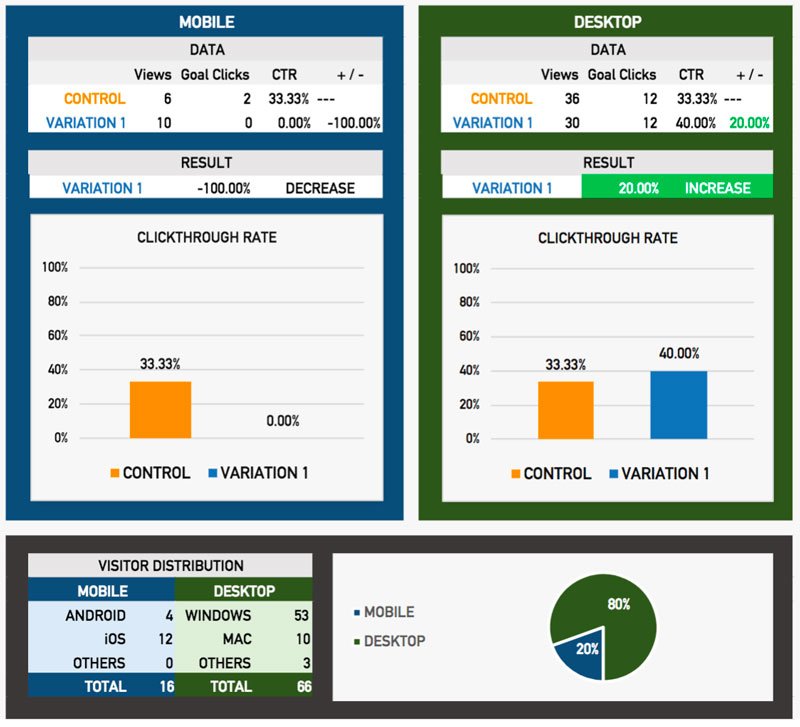

It is, but chances are it only appears that way; you just need to take a closer look to find the gold. If you pull the data out of VWO (or your preferred A/B testing tool) and do some digging, you will find that the devil is in the details.

We see it all the time: mobile and desktop results cancel each other out. That 0.48% overall decrease is just an average across all visitors and doesn’t account for who those visitors are (in this case, the device they are using).

A test that initially seemed to show no meaningful change actually held valuable data. The changes caused a conversion increase for mobile visitors and a decrease for desktop visitors. That shouldn’t come as a surprise, though: what works on desktop doesn’t always work on mobile (fat fingers make life tough).

So we install a device-only plugin, show the changes only to mobile visitors, and suddenly a split test you thought was no good delivers a 22.21% increase in the website’s overall conversion rate.
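
Here is a minimal sketch of that kind of device-level breakdown. The numbers are invented to mimic the pattern described above (mobile up, desktop down, overall roughly flat); they are not the data behind the 0.48% or 22.21% figures, and the `Arm` class and `lift` and `combine` helpers are hypothetical names for illustration. The last line projects what showing the variant to mobile only would do, assuming the segment results hold up, which is exactly what a follow-up mobile-only test should confirm.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    visitors: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.visitors


def lift(control: Arm, variant: Arm) -> float:
    """Relative change in conversion rate of the variant vs. the control."""
    return (variant.rate - control.rate) / control.rate


def combine(*arms: Arm) -> Arm:
    """Pool several segments into one arm, as a whole-test report would."""
    return Arm(sum(a.visitors for a in arms), sum(a.conversions for a in arms))


# Hypothetical per-device results exported from the testing tool.
results = {
    "mobile": {"control": Arm(5_000, 150), "variant": Arm(5_000, 180)},
    "desktop": {"control": Arm(5_000, 250), "variant": Arm(5_000, 215)},
}

overall_control = combine(*(seg["control"] for seg in results.values()))
overall_variant = combine(*(seg["variant"] for seg in results.values()))
# Projection: variant shown on mobile, control kept on desktop.
mobile_only = combine(results["mobile"]["variant"], results["desktop"]["control"])

if __name__ == "__main__":
    for device, arms in results.items():
        print(f"{device}: {lift(arms['control'], arms['variant']):+.1%}")
    print(f"overall: {lift(overall_control, overall_variant):+.1%}")
    print(f"mobile-only rollout (projected): {lift(overall_control, mobile_only):+.1%}")
```

With these made-up counts, mobile is up about 20% and desktop down about 14%, so the blended result looks like a small loss of about 1%, yet the projected mobile-only rollout comes out around +7.5% overall.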

This technique alone will increase your split-testing success rate to nearly 100%.

Are you starting to see the power of CRO?

We see this ALL the time in the tests we run, and it led us to refine our process. Here’s how it works now:

1. Run the split test for all visitors.

2. Segment the concluded test data into mobile and desktop and analyze each separately.

3. Based on the findings, run one test on mobile-only traffic and another on desktop-only traffic. Check our CRO blueprint here…

This has literally 10x’d our clients’ success. By segmenting the data and running separate tests for each device, we now have an almost 100% success rate on our tests. Refining the process like this reveals far more valuable data.

Fix the Mistake: Testing only on desktop will reveal what works best on desktop (remember, CRO doesn’t have to be difficult). The same goes for mobile. Refine your process by segmenting your audience so you don’t get results that cancel each other out.

I have gone through how to do device-level testing in detail.

Mistake #3: Focusing on the Wrong Areas to Test

Opportunity costs for A/B testing are astronomical. Each test should run for 500-1,000 views on each variation, and exactly how many you need comes down to the difference in conversion rates between the variations.

If you own an affiliate site, this means testing the wrong thing can screw up a whole month of sales. You should never test too many elements at the same time (I’ll cover this in detail next), so make sure you choose the highest-impact ones first.

I have discussed this before in detail. When you are testing, make sure you are testing the areas of the page that have the highest impact on conversion rates. Some of the lowest-hanging fruit for A/B tests on your affiliate site are:

● Headlines

● Calls to Action

● Images

● Product Descriptions

● Prices

OK, Kurt, but how do I decide which area to test first?

Tip: What’s the best way to figure out where to run the juiciest A/B tests? You don’t decide. Let your visitors decide. Run heatmaps (with Hotjar or similar software) for a few weeks and see where the testing hotspots are. Start with this article on the best heatmap tools for websites.

Fix the Mistake: Be sure to test the elements that matter most to avoid high opportunity costs. Run heatmaps to home in on the best places to start testing rather than wasting time on elements that don’t matter (looking at you, button color!).

Mistake #4: Split Testing Too Many Things at One Time

The same goes for testing too many areas of your website at once. Once you get that first taste of victory, it’s natural to want to test everything and go overboard.

Resist.

When you test multiple elements on the same page, you run a major risk of polluting the results, and that is poor scientific practice. The two tests can “cross-pollinate” and leave you not knowing which change is responsible for the results. The best-case scenario if you do this is that you muddy the results. The worst case? All your tests become invalid.

EXAMPLE

You decide to run two A/B tests at the same time.

Test 1: You change all of the text links in the comparison table to graphic buttons. Result: +30%.

Test 2: You add images to the mini-reviews below the comparison tables. Result: -23%.

These results now give a distorted view of how the campaign is performing. In fact, you can’t be certain that the results aren’t polluted (meaning the changes from the first test are affecting conversions in the second). Be patient and test one thing at a time. If the worst happens, you can click here to get a quote from our professionals.
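
One way to spot this kind of cross-pollination after the fact is to break Test 1’s result down by which arm of Test 2 each visitor saw. If the button change wins big only when the mini-review images are absent, the two changes are interacting and neither headline number can be trusted on its own. The counts below are hypothetical (they are not the +30%/-23% results from the example), the helper names are illustrative, and the sketch assumes your testing tool lets you export results for each combination of arms.

```python
# Hypothetical (visitors, conversions) for every combination of the two
# overlapping tests. Keys are (test_1_arm, test_2_arm).
cells = {
    ("text_links", "no_images"): (2_500, 100),
    ("buttons", "no_images"): (2_500, 140),
    ("text_links", "images"): (2_500, 90),
    ("buttons", "images"): (2_500, 95),
}


def rate(visitors: int, conversions: int) -> float:
    return conversions / visitors


def button_lift_within(test_2_arm: str) -> float:
    """Relative lift of buttons over text links, holding Test 2's arm fixed."""
    control = rate(*cells[("text_links", test_2_arm)])
    variant = rate(*cells[("buttons", test_2_arm)])
    return (variant - control) / control


if __name__ == "__main__":
    for arm in ("no_images", "images"):
        print(f"Test 1 lift when Test 2 shows {arm}: {button_lift_within(arm):+.1%}")
```

With these made-up numbers, the buttons look like a 40% winner without the images but only about a 6% winner with them, so the “true” effect of Test 1 depends on what Test 2 is doing.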

Fix the Mistake: You might think running multiple tests will help you grow faster, but it’s a dangerous game if you don’t know what you’re doing. Don’t test too many elements at one time. Unless you’re a bad-ass CRO pro (and even if you are), we suggest you stick to testing one single thing at a time.

The road to affiliate site CRO hell is paved with good split-testing intentions. I recommend keeping things as simple as possible. Don’t make CRO harder than it has to be.

Focus on the right elements, segment your audience, and don’t stop the test too soon. Lather, rinse, repeat. This is exactly how we increased our success rate 10x, one win at a time, and it isn’t hard at all. Fixing just one of these simple mistakes can lead to massive upticks in revenue.

What do you think are the most disastrous A/B testing mistakes? Also, check out our conversion rate optimization service; its end goal is to turn qualified users into satisfied customers.
